by Dmitry Kirsanov

18. April 2012 22:45

About five months ago, I made a video about configuring a network load balancing cluster in Windows Server 2008. I am continuing the series on Windows Server 2008 clustering with the next type of cluster: the failover cluster, also known as the “high availability” cluster.

Although Windows Server 2008 supports four types of clusters (Network Load Balancing, Failover, Computational and Grid), the first two are the most commonly used. We’ll also talk about private clouds later, as they do a similar job. In Windows Server 2008, however, the private cloud is functionality provided by an application called System Center Virtual Machine Manager 2012, so unlike clustering it is not a core system feature.

During the series of demos we’ll talk mainly about failover and network load balancing clusters, as the High Performance Computational cluster requires its own special edition of Windows Server 2008, called Windows Server 2008 R2 HPC Edition, and chances are you won’t ever need to set up such an environment.

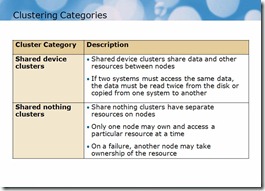

Windows Server 2008 Cluster Categories

As you can see in the slide, clusters fall into two categories according to the way they share resources.

A failover cluster belongs to the second group, which means that it is a group of computers where only one node (i.e. a machine participating in the cluster) owns the resource at a time. You may have two or more machines working as nodes in your failover cluster, but only one of them serves clients at any given moment. Once that machine fails, another node takes ownership of the resources (a shared drive, for example) and starts serving clients instead of the failed node.
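To make the ownership idea a bit more tangible, here is a minimal Python sketch of it. This is only a toy model and not how Windows Server 2008 implements clustering: the `Node` and `FailoverCluster` classes, the node names and the "first healthy node wins" rule are all assumptions made up for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """A machine participating in the (hypothetical) cluster."""
    name: str
    healthy: bool = True


@dataclass
class FailoverCluster:
    """Toy model: exactly one healthy node owns the resource at a time."""
    nodes: List[Node]
    owner: Optional[Node] = field(init=False, default=None)

    def __post_init__(self) -> None:
        # The first healthy node becomes the initial owner (an assumption).
        self.owner = self._first_healthy()

    def _first_healthy(self) -> Optional[Node]:
        return next((n for n in self.nodes if n.healthy), None)

    def report_failure(self, name: str) -> None:
        """Mark a node as failed and move ownership if it was the owner."""
        for node in self.nodes:
            if node.name == name:
                node.healthy = False
        if self.owner is not None and not self.owner.healthy:
            self.owner = self._first_healthy()

    def serve(self, request: str) -> str:
        """Only the current owner ever serves clients."""
        if self.owner is None:
            raise RuntimeError("no healthy node left in the cluster")
        return f"{self.owner.name} handled {request}"


cluster = FailoverCluster([Node("node-a"), Node("node-b")])
print(cluster.serve("request-1"))   # node-a handled request-1
cluster.report_failure("node-a")    # node-a goes down
print(cluster.serve("request-2"))   # node-b handled request-2
```

Note that `node-b` does nothing at all until `node-a` fails, which is exactly the difference from a load balancing cluster, where all nodes serve clients at the same time.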

More...

by Dmitry Kirsanov

16. April 2012 16:00

The Long Tail theory in Search Engine Optimization is a reflection of an older Long Tail concept, which was developed in the middle of the 20th century and which basically states that you can focus either on a few very popular things or on many unpopular ones, but the accumulated popularity of the latter, reinforced by higher specialization and increased diversity, will make them a more stable investment of your money and time.

In other words, instead of trying to moderately please everyone, try to highly please a few diverse groups of customers. If one product fails, it won’t be a catastrophe, because you have other products. And because the product is “aimed” at something special, be it a function or a group of customers, it will be considered “professional” even if it’s no better than more generic or more complex products.

More...

by Dmitry Kirsanov

16. April 2012 05:39

Scott Hanselman is a senior program manager in the developer division (whatever that means) at Microsoft. In other words, he is one of the primary sources of information regarding Microsoft Visual Studio.

He has quite an interesting post about features of Visual Studio 11 which presumably were not noticed by the community. Until his post, anyway.

What I find especially interesting is that the Visual Studio 11 Express edition is going to provide a unit testing feature, something that wasn’t available in free Visual Studio editions before. This goes hand in hand with Visual Studio 11 Team Foundation Server, which got its own free edition as well, making Visual Studio much harder to beat even if the budget is tight. I suppose this could lead to improved quality in other development environments.

More...

by Dmitry Kirsanov

10. April 2012 23:41

Here’s some morning fun for security experts out there.

A few days ago I needed to arrange a payment to Microsoft. The credit card used in the transaction was no longer available a week later, when the company decided to charge it. Not a big deal; I only needed to provide the details of an alternate card. Here is a fragment of the e-mail I received regarding the issue:

“Due to security policy, we strongly recommend you send these details via fax or attached to an e-mail. Please do not type these details in the e-mail body. If you wish, you can provide us with these details via phone.”

More...

by Dmitry Kirsanov

10. April 2012 19:45

Remember The Matrix? The movie by the Wachowski brothers that shook the world in 1999. It featured a concept of accelerated training, in which knowledge was simply uploaded to your brain. In a matter of seconds you could learn Kung-Fu or how to fly a helicopter. Now, what was the most attractive part of that concept?

The fact that you wouldn’t have to fight with yourself day after day until you eventually gave up and abandoned the training, burying your dreams of the black belt or the license.

Imagine if tribe leaders would just gather and sign the peace treaty without starting the war. That’s what real training is: a war of your future against your past. A civil war inside of you, in which one part of your brain screams that it is much safer to sit in the trench or retreat, while the other objects that if you don’t follow your dream now, it will turn into a nightmare later.

More...

by Dmitry Kirsanov

9. April 2012 16:41

Not long ago an acquaintance of mine, an HR manager, said that she doesn’t believe that I deleted a small document I created a year ago for my own needs: a list of 20 questions for beginner software developers. I would never have considered it an asset.

She couldn’t believe I was able to delete such an important and useful thing.

I tried to recall what else I had either deleted or abandoned during my professional life, and who would consider THAT an asset. And the scale of what I saw led me to an obvious, but perhaps unwritten, law of software developers. The law of the draft.

In short, I believe that you should always have at least one project ongoing, that it shouldn’t be anything related to your job, and that you should not feel obliged to release it.

Here is why I think you should follow this rule, unless you already do:

More...

by Dmitry Kirsanov

30. March 2012 21:37

The problems of Search Engine Optimization are usually considered to be the problems of the company’s marketing division, and system administrators or software developers rarely realize when what they do causes significant trouble for website marketing.

Today we will talk about one of the biggest problems you may encounter in Search Engine Optimization, the content duplication problem, and what you, as a web developer, system administrator or website owner, should do to prevent it.

More...

by Dmitry Kirsanov

30. March 2012 09:58

In this short video, which is more of an addition to the 4th part of the PowerShell introduction videos for Windows system administrators, I show the basic concepts of script flow control.

This is very natural and basic for software developers, but system administrators with no prior experience in Windows PowerShell may find it very useful.

In this particular example we connect to a remote computer and list its network adapters, then do different things depending on whether these adapters are DHCP enabled or not.
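The video itself uses PowerShell against a real remote machine. Purely to illustrate the branching logic, here is a rough Python sketch of the same idea. The `Adapter` class, the adapter names and the hard-coded list are hypothetical stand-ins for whatever the remote query would actually return.

```python
from dataclasses import dataclass


@dataclass
class Adapter:
    """Hypothetical stand-in for one network adapter record."""
    description: str
    dhcp_enabled: bool


def describe_adapter(adapter: Adapter) -> str:
    """Pick a different action depending on the DHCP setting."""
    if adapter.dhcp_enabled:
        return f"{adapter.description}: address assigned by DHCP"
    else:
        return f"{adapter.description}: static configuration"


# Sample data in place of the list fetched from the remote computer.
adapters = [
    Adapter("Ethernet 1", dhcp_enabled=True),
    Adapter("Ethernet 2", dhcp_enabled=False),
]

# The loop plus the if/else inside describe_adapter is the whole "flow control".
for adapter in adapters:
    print(describe_adapter(adapter))
```

In a real script the two branches would do something more useful than build a string, but the structure (loop over the items, test a property, branch) stays the same.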

More...

by Dmitry Kirsanov

29. March 2012 17:25

This is my first article about the hiring process, even though I’ve been on both sides of the barricades for many years and for a long time have thought about, and even taught people, some aspects of the hiring process, as well as accumulated knowledge from them.

As you know, I train IT specialists, and their reason for training, whether explicitly expressed or implied, is to find a better opportunity that would return the investment in training. In other words, to change jobs.

Even though it’s neither the beginning nor the end of the hiring process, and not even the most important part of it, the cover letter can “make or break” the first impression you make on the HR (human resources) manager of your future employer.

More...

by Dmitry Kirsanov

22. March 2012 17:42

One of the qualities of PowerShell, and one of the scales by which to mark its success, is security. It is also the first question asked when someone new to PowerShell tries to run a PowerShell script.

The previous generations of scripting environments, like the Windows Scripting Host with its notorious VBS files sent automatically over e-mail by all sorts of worms and trojans, cried out for better security, not only in terms of overcoming those problems, but also in terms of applying the newest standards and technologies.

So this video training article is about security in Windows PowerShell. More...