Every day I create so many temporary files that I can’t really give you a count. Sometimes it’s thousands. Opening attachments from e-mails and instant messengers, saving images from the internet for a single use, opening archives, deploying software and many other tasks all create temporary files that may stay on a hard drive for years.

By temporary files I don’t mean the files created by applications to temporarily store data. I mean the actual user files you don’t intend to use in the future.

Log files are another type of temporary file. Usually we have them on servers: web server logs, mail server logs, or your own application that creates a set of log files each day. Usually you don’t need to store these files for more than a few months, especially if they are stored on cloud virtual machines, such as Azure or Amazon, where you pay for each megabyte of storage.

There are two aspects of temporary files that may justify doing something about them:

1. They take up space, or they are produced in numbers that degrade the performance of the file system. The files don’t have to fill the whole drive: thousands of files in one directory are enough to make Windows freeze every time it tries to find and list them. For some directories we would prefer to have an age threshold after which the files should vanish.

2. They may contain sensitive information that you wouldn’t want to leave behind, whether of a financial, medical, business, political or any other nature. When a file has expired there is no need to keep it, but some files may require special care in the form of secure erasure.

The tools we need

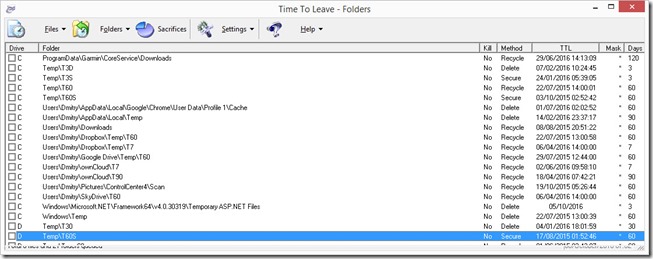

In 2006, 10 years ago, I wrote a Windows application that I called “Time To Leave”. It wasn’t really popular, but it made my life so much easier: it monitored directories on servers and workstations for old files and then deleted them using one of three selectable methods: normal deletion, secure deletion, or moving to the recycle bin.

It worked either as a utility launched at user startup or as a Windows service. I am still using it everywhere, but because it was written a long time ago in Visual Basic 6, I can’t really support it or introduce new features. I have nearly forgotten the language.

Having such a powerful tool, I inevitably arrived at a practice that now helps me keep both servers and workstations clean and tidy, and that’s what this article is about.

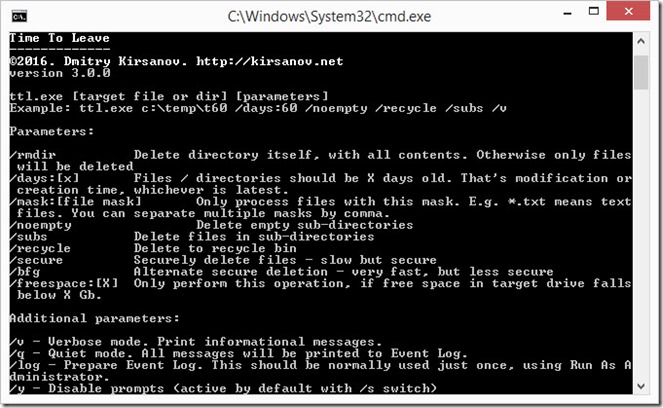

Speaking of tools, a few hours ago I completed another one, which I called “Time To Leave 3.0”, and it is a command line utility. I found that my old TTL 2 doesn’t delete empty directories, and instead of trying to solve that by editing the obsolete code, I simply created something I could easily use in batch files on modern servers.

Although it doesn’t run as a Windows service, it can easily be used in batch files and/or the Windows Task Scheduler. It works basically the same as the old version, but much faster.

The new utility requires .NET Framework 4.5, so it can’t be used on Windows XP (the last .NET Framework version that runs on Windows XP or Windows Server 2003 is 4.0). But the older application can be used there, so there is no problem.

The executable is just 18 KB, but it is shipped with Sysinternals SDelete, which can be downloaded here: https://docs.microsoft.com/en-us/sysinternals/downloads/sdelete

The Practice

1. Know where you save, and prepare the environment

As you may notice from the screenshots above, I use a directory structure that is self-describing and consistent. I usually have one directory called Temp, and inside it a number of directories named like T[Number of Days][Type of data]. For example, T60 means it’s a Temporary directory where files are kept for 60 days and then recycled. Recycling is my default action, so I have no additional marker for it. T60S means that files will be Securely deleted after 60 days. T60D means that files will be Deleted normally after 60 days, without going to the recycle bin.
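To make the naming convention concrete, here is a minimal Python sketch that turns a directory name into a retention policy. It is only an illustration of the T[Number of Days][Type of data] scheme described above, not part of TTL; the names `parse_policy`, `Policy` and `ACTIONS` are made up for this example.

```python
import re
from typing import NamedTuple, Optional

# Hypothetical mapping of the suffix letter to an action, following the
# convention in the text: no suffix = recycle, S = secure delete, D = delete.
ACTIONS = {"": "recycle", "S": "secure", "D": "delete"}

# "T", then the number of days, then an optional S or D marker.
_PATTERN = re.compile(r"^T(\d+)([SD]?)$", re.IGNORECASE)


class Policy(NamedTuple):
    days: int
    action: str


def parse_policy(dir_name: str) -> Optional[Policy]:
    """Return the retention policy encoded in a directory name, or None
    if the name does not follow the T[days][type] convention."""
    match = _PATTERN.match(dir_name)
    if match is None:
        return None
    return Policy(days=int(match.group(1)), action=ACTIONS[match.group(2).upper()])


if __name__ == "__main__":
    for name in ("T60", "T60S", "T3D", "Temp"):
        print(name, parse_policy(name))
```

A script built on something like this could walk the Temp directory and decide what to do with each sub-directory purely from its name, which is the point of keeping the names self-describing.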

All temporary directories whose names end with S use NTFS encryption. It’s not “military grade” protection, but it is a must-have if these files should not be viewed by other users of your computer, or if they are saved on a machine that you don’t control all the time (e.g. in the office or while travelling). If the hard drive uses shadow copies or other backups, I make sure that these directories are not covered by the backup.

The reason for having different directories is that some files naturally have a longer lifetime than others. For example, I may download a file only to send it by e-mail or post it online, and I won’t need it after that. The desired lifespan for such a file is about one day, so I save it to T3D. And if that file is NSFW or contains financial information, it goes to T3S.

The same goes for cloud storage. In Dropbox, Google Drive or OneDrive it is better to have a directory for temporary files, so that when you share a file with someone, you can be sure it will be deleted after some time and won’t consume your quota. When sharing, I usually use T90.

2. Sort your refuse

I wouldn’t recommend using the Downloads directory, which is the default location for saving files from the Internet. Sort your files from the very beginning. If you are downloading a setup file that you need right now but may want to keep for later, just save it to T60. Then, if you forget about it for 2 months, it will be deleted and stop using your drive space. I’ve seen files in people’s Downloads directories that are 4 years old, sometimes large ISO images. In my environment that’s just impossible.

3. Security

One thing I have already mentioned is using NTFS encryption when possible. Regarding secure deletion, there are two ways TTL deletes files: using SDelete, and an alternative method which renames and partially overwrites the file, and then deletes it normally.

SDelete tries to delete the file properly, including erasing free space on the drive, and that takes a lot of time. The BFG method (the /bfg switch) only ensures that the file can’t be recovered from a conventional hard drive (i.e. not an SSD), by renaming it and overwriting it with 32 KB of zero bytes.
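The idea behind the quick method can be sketched in a few lines of Python. This is not TTL’s actual code: the function names are hypothetical, and the sketch assumes that the 32 KB of zeros are written over the beginning of the file and that the file is renamed to a random name in the same directory.

```python
import os
import uuid

WIPE_BYTES = 32 * 1024  # 32 KB, as described in the text


def overwrite_head(path: str, wipe_bytes: int = WIPE_BYTES) -> None:
    """Overwrite the first `wipe_bytes` of the file (or the whole file,
    if it is shorter) with zero bytes, in place."""
    size = os.path.getsize(path)
    with open(path, "r+b") as handle:
        handle.write(b"\x00" * min(size, wipe_bytes))
        handle.flush()
        os.fsync(handle.fileno())  # ask the OS to push the zeros to disk


def quick_wipe(path: str) -> None:
    """Rename the file to a random name, damage its beginning, delete it."""
    directory = os.path.dirname(path)
    scrambled = os.path.join(directory, uuid.uuid4().hex)
    os.rename(path, scrambled)  # the original file name is no longer listed
    overwrite_head(scrambled)
    os.remove(scrambled)
```

Only the head of the file is touched, so the cost does not grow with file size. That is the trade-off: it is fast, but anything beyond the first 32 KB stays on the disk until it is reused, and on SSDs or copy-on-write file systems an in-place overwrite may not hit the original blocks at all.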

I am planning to introduce a BFG2 method, which will damage every second byte of the file, then rename and delete it. This would make any document unreadable, even a plain text file, but again it doesn’t aim to be a really secure method. For real security, use the slow /secure method.

The reason behind the BFG is to provide a quick way to delete a file that makes it impossible for a company system administrator to restore it. It also doesn’t wear out your hard drive in the process.

Update 27/10/2016

Updated the installation package to version 3.1.0. The main difference is support for a new switch:

/keep:[number of files]

This switch lets you keep a given number of files even though they are old. This may be useful when you want to get rid of old logs or saved games, but want to keep the most recent ones, no matter how old they are. Here is an example: you have a directory with 30 files, 5 of them recent and 25 old. Normally, TTL would delete all 25 old files. But suppose you want to keep 10 files alive at all times. With the command line switch /keep:10, TTL would delete only 20 files: the 5 recent files and the 5 newest of the old ones survive. The setting is per directory, not per root path. So if you have 2 sub-directories, TTL would keep 10 files in each of them, not 10 in total.
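The arithmetic in that example can be checked with a small Python sketch. It only models the selection rule as described here (protect the newest files, then delete whatever else is past the age limit) and is not taken from TTL’s source; `select_for_deletion` and its parameters are hypothetical names.

```python
from typing import List, Tuple


def select_for_deletion(
    files: List[Tuple[str, float]], max_age_days: float, keep: int = 0
) -> List[str]:
    """Pick the files to delete in ONE directory.

    `files` is a list of (name, age_in_days) pairs. The `keep` newest
    files are protected regardless of age; everything else that is older
    than `max_age_days` is returned for deletion.
    """
    newest_first = sorted(files, key=lambda item: item[1])
    candidates = newest_first[keep:]  # skip the protected newest files
    return [name for name, age in candidates if age > max_age_days]


if __name__ == "__main__":
    # 5 recent files (1 day old) and 25 old files (100+ days old).
    directory = [(f"recent{i}.log", 1.0) for i in range(5)]
    directory += [(f"old{i}.log", 100.0 + i) for i in range(25)]

    print(len(select_for_deletion(directory, max_age_days=60)))           # no /keep
    print(len(select_for_deletion(directory, max_age_days=60, keep=10)))  # /keep:10
```

Because the function looks at one directory at a time, calling it for each sub-directory gives the per-directory behaviour described above.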

Update 29/09/2022

Better late than never! TTL became open-source (though it wasn't hard to decompile it earlier either), and is available at https://github.com/dmki/TTL !