These days nearly all mobile devices (not just cell phones, but anything mobile enough) have sensors, and sensors are our topic for today.

By the way, the way the term “mobile” is used today reminds me of an old anecdote: an IT guy was trying to explain the difference between a floppy disk and a hard disk to a lady, and she asked him whether the floppy disk wasn't hard enough for him. So let's settle on a definition: mobile devices are those that are not stationary.

As we saw previously, Windows 8 supports many platforms. Each platform has its own sensors, and as new sensors are invented, the OS needs to support them too.

You may also find that your mobile device has more sensors than its specs list. For instance, you may enjoy a compass and an inclinometer even though your device has no such hardware. That's because some sensors are “fusion”, or “virtual”, sensors: their data are the result of computational analysis of data from other, “real” or “raw”, sensors.

Using hardware resources in Windows 8

There are a few things you should know about sensors, and about devices in general.

First of all, some of them can only be accessed by one application at a time. There are various reasons for this, but the most important one, which explains a lot about how Windows 8 works, is that the new Windows is all about saving resources (that is, energy) and doing more work with less powerful hardware. To quote the German-American architect Ludwig Mies van der Rohe once again, less is more.

You can see the same principle in the Windows 8 UI concept, where fewer graphical “enhancements” leave more space for content. It also holds for the way Windows 8 works with hardware: by consuming fewer hardware resources, it gives you more time to work, and also more features, since resources are not locked by other applications.

Take the camera, for example. Only one application can use it at any given time: if your application is using the camera, no other application can. But once your Metro application is deactivated (for example, the user pressed the Start button on their tablet or phone and switched to the Start screen), that resource is taken from your application and another app can use it.
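To make the exclusive-access rule concrete, here is a minimal Python model of it. `ExclusiveDevice` and its methods are hypothetical names used only for illustration and are not Windows APIs. The model shows two things: a second app is refused while the first one holds the device, and deactivation hands the device back so someone else can take it.

```python
from typing import Optional


class ExclusiveDevice:
    """Hypothetical model of a device (e.g. a camera) that only one app may hold."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.owner: Optional[str] = None

    def acquire(self, app: str) -> bool:
        # Succeeds only if the device is free or already held by this same app.
        if self.owner is None or self.owner == app:
            self.owner = app
            return True
        return False

    def on_app_deactivated(self, app: str) -> None:
        # When the owning app is deactivated, the system takes the device back.
        if self.owner == app:
            self.owner = None


camera = ExclusiveDevice("camera")
print(camera.acquire("PhotoApp"))    # True: the camera was free
print(camera.acquire("ScannerApp"))  # False: PhotoApp holds it
camera.on_app_deactivated("PhotoApp")
print(camera.acquire("ScannerApp"))  # True: released when PhotoApp was deactivated
```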

This calls for some careful logic. Say you were using a resource, then your application was deactivated, and after reactivation you try to use that resource again. The attempt fails, because the resource was never reconnected. So you should always keep track of which resources you are using and whether they need to be reconnected.
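The bookkeeping described above can be sketched with a hypothetical `ResourceTracker` helper, which is not part of any Windows API. The tracker remembers *how* to connect each resource, drops stale handles when the app is suspended, and reconnects everything on resume. In a real Windows Store app you would presumably call `on_suspending`/`on_resuming` from the application's `Suspending` and `Resuming` event handlers. Here they are called by hand.

```python
from typing import Callable, Dict


class ResourceTracker:
    """Hypothetical helper: remembers which resources the app uses so they can be
    released on suspend and reconnected on resume."""

    def __init__(self) -> None:
        self._connectors: Dict[str, Callable[[], object]] = {}
        self.handles: Dict[str, object] = {}

    def use(self, name: str, connect: Callable[[], object]) -> object:
        # Remember how to (re)connect, then connect now.
        self._connectors[name] = connect
        self.handles[name] = connect()
        return self.handles[name]

    def on_suspending(self) -> None:
        # The system is about to take resources away: forget the stale handles.
        self.handles.clear()

    def on_resuming(self) -> None:
        # Reconnect everything the app was using before deactivation.
        for name, connect in self._connectors.items():
            self.handles[name] = connect()

    def get(self, name: str) -> object:
        if name not in self.handles:
            raise RuntimeError(f"{name} is not connected; reconnect it after resume")
        return self.handles[name]


# Usage: a fake connector that numbers each new camera session.
connections = []

def connect_camera() -> str:
    connections.append("camera")
    return f"camera-session-{len(connections)}"

tracker = ResourceTracker()
tracker.use("camera", connect_camera)
tracker.on_suspending()
tracker.on_resuming()
print(tracker.get("camera"))  # camera-session-2
```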

Sensor Types

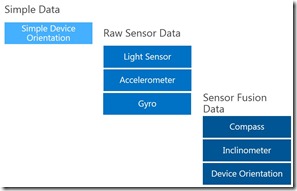

Sensors can be divided into three groups: simple, raw and fusion.

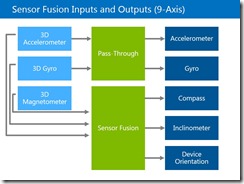

Quite obviously, fusion sensors need raw sensors to be working in order to calculate their own data. This means that the Compass needs both the 3D gyro and the 3D magnetometer to be powered on and functioning in order to provide your application with the direction to True North.
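The following simplified sketch shows why a compass reading is a computed (“fusion”) value. It covers only the core calculation. The function takes a heading from the horizontal magnetometer components and shifts it from magnetic north to true north using the local magnetic declination. The axis convention and function names are assumptions for illustration. This version only works for a device lying flat. A real fusion compass also uses motion sensors to compensate for tilt and to smooth the output, which is why it depends on more than one raw sensor.

```python
import math


def magnetic_heading(mx: float, my: float) -> float:
    """Heading in degrees clockwise from magnetic north, for a device lying flat.

    Assumed axes (illustrative only): x points toward the device's right edge,
    y toward its top edge; mx, my are the horizontal magnetic field components.
    """
    heading = math.degrees(math.atan2(-mx, my))
    return heading % 360.0


def true_heading(mx: float, my: float, declination_deg: float) -> float:
    """Shift the magnetic heading to true north; declination is positive to the east."""
    return (magnetic_heading(mx, my) + declination_deg) % 360.0


# Device top edge pointing east: the field's north component lies along -x.
print(true_heading(-1.0, 0.0, 5.0))  # 95.0
```

The only “data” here are two field components. Everything else is arithmetic, which is why such a sensor can exist without its own hardware.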

The simple sensor level is the easiest way to find out things like whether the device has been flipped or rotated. Combine it with the ambient light sensor and you have many more usage scenarios for your application. How about a 3D model of an environment, where the user can use their tablet as a “window” into, say, your car shop?
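Windows exposes this level through `SimpleOrientationSensor`, which reports values such as `NotRotated`, `Faceup` and `Facedown`. The Python below does not use that API. It is a hypothetical illustration of the kind of thresholding that turns a gravity vector into a simple orientation. The axis convention, labels and the 0.8 g threshold are all assumptions.

```python
def classify_orientation(gx: float, gy: float, gz: float,
                         flat_threshold: float = 0.8) -> str:
    """Hypothetical mapping from a gravity vector (in g) to a simple orientation.

    Assumed convention: (gx, gy, gz) is the direction of gravity in device
    coordinates, x toward the right edge, y toward the top edge, z out of the screen.
    """
    if gz <= -flat_threshold:
        return "face_up"        # gravity goes through the back of the device
    if gz >= flat_threshold:
        return "face_down"      # gravity goes through the screen
    if abs(gy) >= abs(gx):
        return "portrait" if gy < 0 else "upside_down"
    return "left_edge_down" if gx < 0 else "right_edge_down"


print(classify_orientation(0.0, -1.0, 0.0))  # portrait
print(classify_orientation(0.0, 0.0, -1.0))  # face_up
```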

Raw sensors are for complex scenarios where you know what to do with the data. For example, the ambient light sensor gives you its value in lux (lumens per m²). I could use that sensor to measure whether there is enough light in a public place (a typical standard is about 100 lux), compare that with how many customers we have in the room, and maybe even adjust the lights automatically.
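Here is a sketch of the automatic lighting idea, built around a hypothetical `LightController` that receives lux readings from the ambient light sensor. The controller averages the last few readings so that a passing shadow doesn't flip the lights. It also waits until the light is a margin above the 100 lux target before switching off again (hysteresis), so the lights don't flicker around the threshold. The 20 lux margin and the five-reading window are arbitrary choices for the example.

```python
from collections import deque


class LightController:
    """Hypothetical controller: switches room lights on when smoothed ambient
    light drops below a target, and off again only above target + margin."""

    def __init__(self, target_lux: float = 100.0, margin_lux: float = 20.0,
                 window: int = 5) -> None:
        self.target = target_lux
        self.margin = margin_lux
        self.readings = deque(maxlen=window)  # most recent lux readings
        self.lights_on = False

    def on_reading(self, lux: float) -> bool:
        self.readings.append(lux)
        average = sum(self.readings) / len(self.readings)
        if not self.lights_on and average < self.target:
            self.lights_on = True
        elif self.lights_on and average > self.target + self.margin:
            self.lights_on = False
        return self.lights_on


controller = LightController()
print(controller.on_reading(150.0))  # False: plenty of light
print(controller.on_reading(90.0))   # False: average is 120, one dim reading is ignored
```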

Fusion sensors are based on data received from raw sensors. Since fusion sensors are not really devices, using them has no hardware-locking implications, and two different fusion sensors do not necessarily use the same algorithm.

Some sensors and other data-providing devices are faster than others, because some things are easier to measure. For example, the light sensor simply measures illuminance, which is very fast. GPS (which is not a sensor, but provides data the same way one does) can need up to a minute to receive and analyze data from the satellites. How long it takes depends on the hardware, but a “cold start” typically takes tens of seconds. But Windows 8 is quite smart: in Windows Phone 8, for example, when you request the GPS position you can get the last registered position without waiting for the GPS. That's quite handy, especially given how much energy a typical GPS receiver uses.
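On the platform side, `Geolocator.GetGeopositionAsync` has an overload that takes a maximum age and a timeout for exactly this purpose. The Python below is a hypothetical model of the same caching decision, not that API. If the last fix is younger than `max_age_s`, the model returns it. Otherwise it pays for a new, slow acquisition. The fake clock and fake GPS exist only to make the behaviour visible.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Fix:
    latitude: float
    longitude: float
    timestamp: float  # seconds, as measured by the provider's clock


class PositionProvider:
    """Hypothetical wrapper: reuse a recent fix, or acquire a new (expensive) one."""

    def __init__(self, acquire_fix: Callable[[], Fix],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._acquire = acquire_fix
        self._clock = clock
        self._last: Optional[Fix] = None

    def get_position(self, max_age_s: float) -> Fix:
        now = self._clock()
        if self._last is not None and now - self._last.timestamp <= max_age_s:
            return self._last  # fresh enough: no need to power up the GPS
        self._last = self._acquire()
        return self._last


# Usage with a fake clock and a fake GPS receiver.
now = [0.0]
acquisitions = []

def fake_gps() -> Fix:
    acquisitions.append(now[0])  # stands in for a slow, power-hungry fix
    return Fix(0.0, 0.0, now[0])

provider = PositionProvider(fake_gps, clock=lambda: now[0])
provider.get_position(max_age_s=60)  # nothing cached yet: acquires
now[0] = 30.0
provider.get_position(max_age_s=60)  # 30 s old: served from the cache
now[0] = 120.0
provider.get_position(max_age_s=60)  # 120 s old: acquires again
print(acquisitions)                  # [0.0, 120.0]
```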

That's why you need to test your applications on physical devices, preferably several from different vendors, because different sensors and geolocation devices may behave differently. Better still, invest time and money in engaging your users in beta tests. That way you get more insight into how your application works with a faulty [Company Name] sensor.

Remember that the Windows Store is rating-driven, so it's better to hear about faults from your users directly than to read about their adventures in Windows Store reviews.

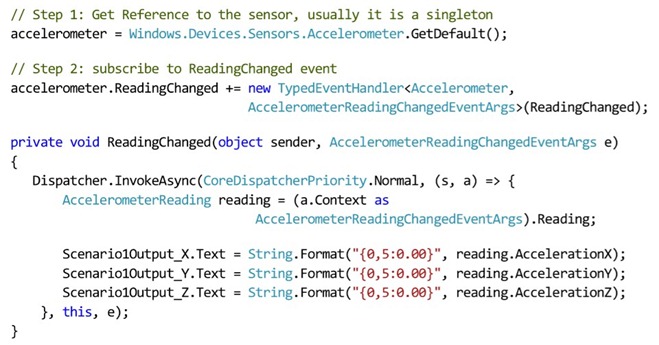

You can find all the sensors in the Windows.Devices.Sensors namespace, and here is how to use them:

Sensor Events

First, you connect to the device, then you subscribe to its events and read the data they deliver. Let's take the Accelerometer as an example:
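In WinRT, these steps go as follows. First, call `Accelerometer.GetDefault()`, which returns null when the device has no accelerometer. Next, optionally set `ReportInterval`, keeping it no lower than `MinimumReportInterval`. Finally, subscribe to `ReadingChanged`, whose reading carries `AccelerationX`/`Y`/`Z`. The Python below only models those three steps with a hypothetical `FakeAccelerometer` class so the flow can run anywhere. Its names and the 16 ms minimum are made up and are not Windows APIs.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Reading:
    x: float  # acceleration along each axis, in g
    y: float
    z: float


class FakeAccelerometer:
    """Hypothetical stand-in for a platform accelerometer (not a Windows API)."""

    minimum_report_interval_ms = 16  # made-up hardware limit

    def __init__(self) -> None:
        self.report_interval_ms = 0  # 0 = let the driver pick a default
        self._handlers: List[Callable[[Reading], None]] = []

    @staticmethod
    def get_default(hardware_present: bool = True) -> Optional["FakeAccelerometer"]:
        # Like the real GetDefault(), return nothing when there is no sensor.
        return FakeAccelerometer() if hardware_present else None

    def subscribe(self, handler: Callable[[Reading], None]) -> None:
        self._handlers.append(handler)

    def unsubscribe(self, handler: Callable[[Reading], None]) -> None:
        self._handlers.remove(handler)

    def push(self, reading: Reading) -> None:
        # A real driver raises the event; here we raise it by hand.
        for handler in list(self._handlers):
            handler(reading)


def run_demo(hardware_present: bool = True) -> Optional[Reading]:
    # 1. Connect to the device, and check that it exists at all.
    sensor = FakeAccelerometer.get_default(hardware_present)
    if sensor is None:
        return None  # degrade gracefully, e.g. hide tilt-based features
    # 2. Configure it and subscribe to its readings.
    sensor.report_interval_ms = max(sensor.minimum_report_interval_ms, 50)
    received: List[Reading] = []
    handler = received.append
    sensor.subscribe(handler)
    # 3. Read the data as it arrives, then unsubscribe when done.
    sensor.push(Reading(0.0, -1.0, 0.0))
    sensor.unsubscribe(handler)
    return received[-1]


print(run_demo())                        # Reading(x=0.0, y=-1.0, z=0.0)
print(run_demo(hardware_present=False))  # None
```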

As you may notice, everything is pretty straightforward. Of course, different sensors have different properties and events, but the principle is the same.

Interesting Facts:

- The accelerometer is the only sensor that is part of the Windows Phone specification. Other sensors may not be present, so you should always check.

- Some sensors support only a limited number of instances per application. Always check the MSDN article for each specific class before making decisions about your application's architecture.
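One architectural way to handle both points is to open each sensor type once, at the application level, and fan its readings out to every part of the app that needs them. The sketch below is a hypothetical `SensorHub` in Python, not a Windows class. It treats a sensor that fails to open as simply absent. The actual instance limits of each sensor class are something to confirm on MSDN, as noted above.

```python
from typing import Callable, Dict, List, Optional


class SensorHub:
    """Hypothetical app-level hub: opens at most one instance per sensor type
    and shares its readings with any number of listeners."""

    def __init__(self, factories: Dict[str, Callable[[], Optional[object]]]) -> None:
        self._factories = factories  # how to open each sensor type
        self._instances: Dict[str, object] = {}
        self._listeners: Dict[str, List[Callable[[object], None]]] = {}

    def listen(self, sensor_type: str, listener: Callable[[object], None]) -> bool:
        if sensor_type not in self._instances:
            instance = self._factories[sensor_type]()
            if instance is None:  # the sensor is not present on this device
                return False
            self._instances[sensor_type] = instance
        self._listeners.setdefault(sensor_type, []).append(listener)
        return True

    def dispatch(self, sensor_type: str, reading: object) -> None:
        # Called from the single real sensor's event handler.
        for listener in self._listeners.get(sensor_type, []):
            listener(reading)

    def instance_count(self) -> int:
        return len(self._instances)


# Usage: two listeners share one accelerometer; the gyrometer is missing.
opened = []

def open_accelerometer() -> object:
    opened.append("accelerometer")
    return object()

hub = SensorHub({"accelerometer": open_accelerometer, "gyrometer": lambda: None})
a, b = [], []
hub.listen("accelerometer", a.append)
hub.listen("accelerometer", b.append)
print(hub.listen("gyrometer", print))  # False: no gyrometer on this device
hub.dispatch("accelerometer", (0.0, -1.0, 0.0))
print(len(opened), a, b)  # 1 [(0.0, -1.0, 0.0)] [(0.0, -1.0, 0.0)]
```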

We'll cover sensors in more examples a bit later. For now, here is the sample code to play around with and see how it works:

SteeringWheel.7z (2.79 MB)